5. Create a Invoices Data pipeline

This tutorial is a part of the series - Guided set up.

Invoices - API Documentation

Pipeline Requirements

Pipeline Schedule - Run it once a day at 6 A.M. Eastern Time and fetch all the invoices that have been created or modified since yesterday.

Data fetch strategy: We want to fetch all the Invoice records that have been created or modified yesterday. This is Incremental dedupe strategy.

In data warehousing Invoices is qualified as a fact. Each day a large number of invoices are created and modified. Fetching the entire invoices data everyday is not possible. Hence we will be fetching the data incrementally.

In Incremental Dedupe - Only the newly created or modified data is fetched. The new data is deduped against the existing data to make sure that no duplicate records are present. Hence while running incremental dedupe we need some extra information:

- Unique Key - How do we identify a unique records. This is for the app to decide wether to update or insert the data.

- Sort Key - How to decide which is the most recent data for the same unique key and for which record/data to retain.

Data Schema requirements: If ServiceTitan adds a new field to the APIs, automatically include that.

Data Storage

Azure Blob - In the Azure blob create a folder for Invoices. Within Invoices folder create a folder for every day's data run.

AzureSQL - Create a new table - "invoices" in "servicetitan" schema and write the data to it.

Pipeline set up

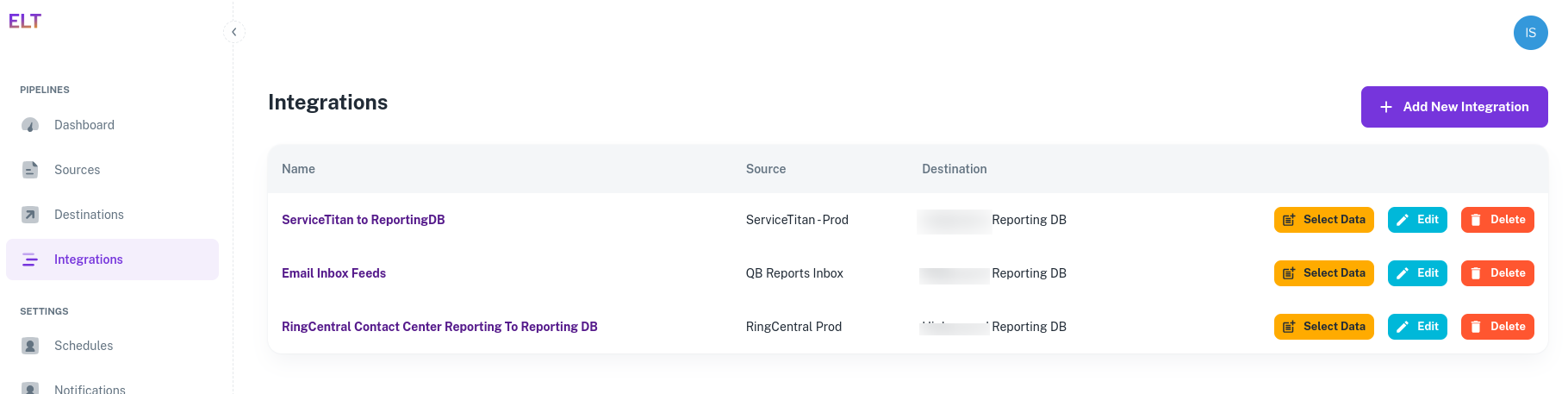

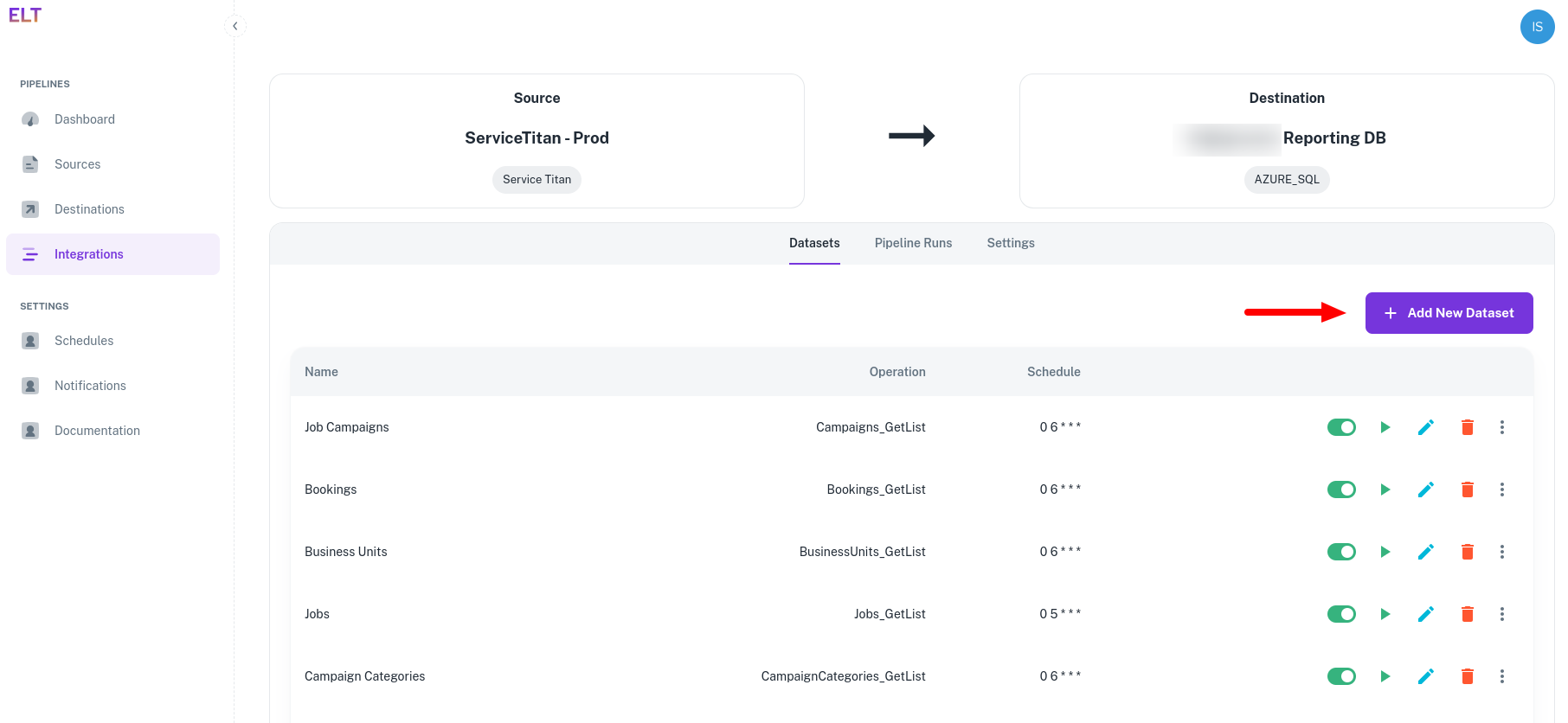

- Navigate to Integrations Tab in ELT Data and click on "Select Data" for ServiceTitan to ReportingDB integration.

- Click on the "+ Add New Dataset".

Setting up the data pipeline is a three step process.

API Details - Specify the API from which you want to fetch the data.

Connection/Pipeline Name - Give your dataset a name

Pagination - Switch it On. ELT Data automatically paginates over the APIs. If the API supports pagination, switch it On.

OperationId - Select the API from which you want to fetch the data.

Variables - These are dynamic inputs to the APIs. For e.g - date parameters or dynamic strings. These variables are used for defining the folder format too. Create the following variables

"yesterday" and provide the value -

(datetime.datetime.now().date() - datetime.timedelta(days=1)).strftime('%Y-%m-%d'). This variable will be passed to the API call. Whenever the API runs, it will resolve the variable to yesterday's date."folder_format" and provide the value -

(datetime.datetime.now().date() - datetime.timedelta(days=1)).strftime('%Y-%m-%d'). This will create a datewise folder in the blob.

The variables are python datetime functions and can support wide variety of input/output formats.

API Parameters - The API parameters are rendered based on the selected API.

Populate the ST-App-Key and TenantID. You get these values on setting up API authentication.

modifiedOnOrAfter - set it to "{{yesterday}}" - We are using the date field "modifiedOnOrAfter" since we have to fetch all the data created or modified since "yesterday". You can select any other date field too based on your business rules.

tipUsing ModifiedOnOrAfter or LastModified date is the safest way or running the pipelines in incremental mode. If you don't have a last modified column, consider using the Incremental Drop and Load strategy or write to us and we will help you with the correct setup.

Schedule and Data fetch strategy - Schedule and how do you want to overwrite the data in your warehouse.

- Select the 6 A.M. ET schedulel rr create a new schedule in the Schedules Tab under "Settings".

- Dedupe Strategy - Set it to Incremental Dedupe History

- Dedupe Keys Json Paths - Set it to

data[*].id. ServiceTitan provides an ID with every entity. This is the unique key for a record. Dedupe and Sort Key documentation - Sort Keys Json Paths -

data[*].modifiedOn. This field helps us identify the latest record amongst two records having same unique ID. Dedupe and Sort Key documentation

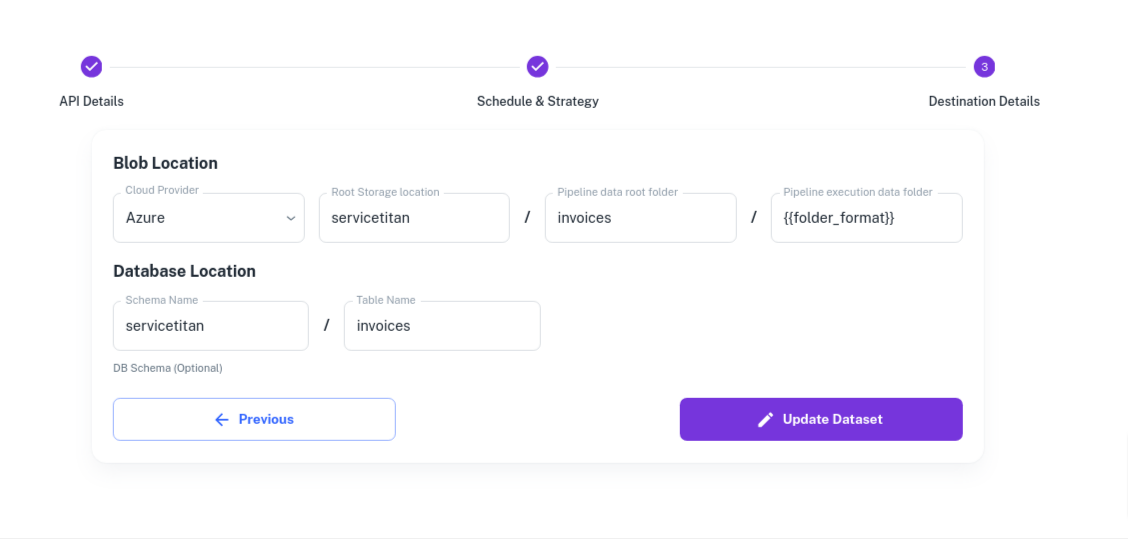

Destination Details - Destination details

Under Destination Details we have to specify where to write the data in the AzureBlob and where do we want to write the final data in AzureSQL.

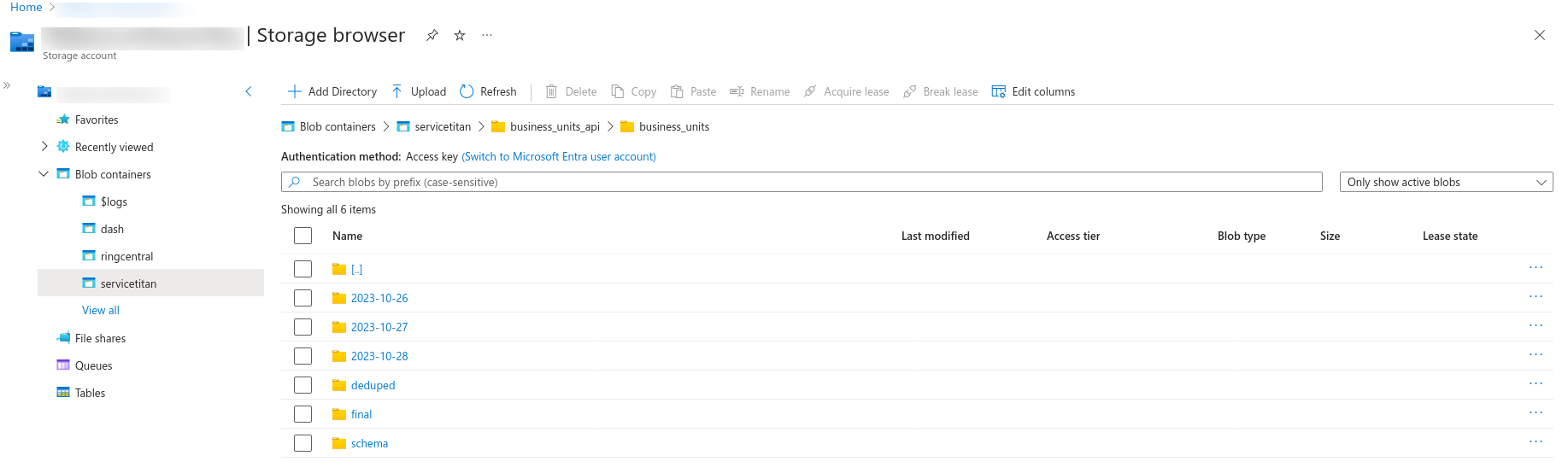

{{folder_format}}refers to the variable we created on the first page. For everyday's execution a new folder will be created using the variable{{folder_format}}and the data will be written to it.- The final deduped data ia available in - "final" folder.

- The schema changes are being tracked under the "schema" folder.

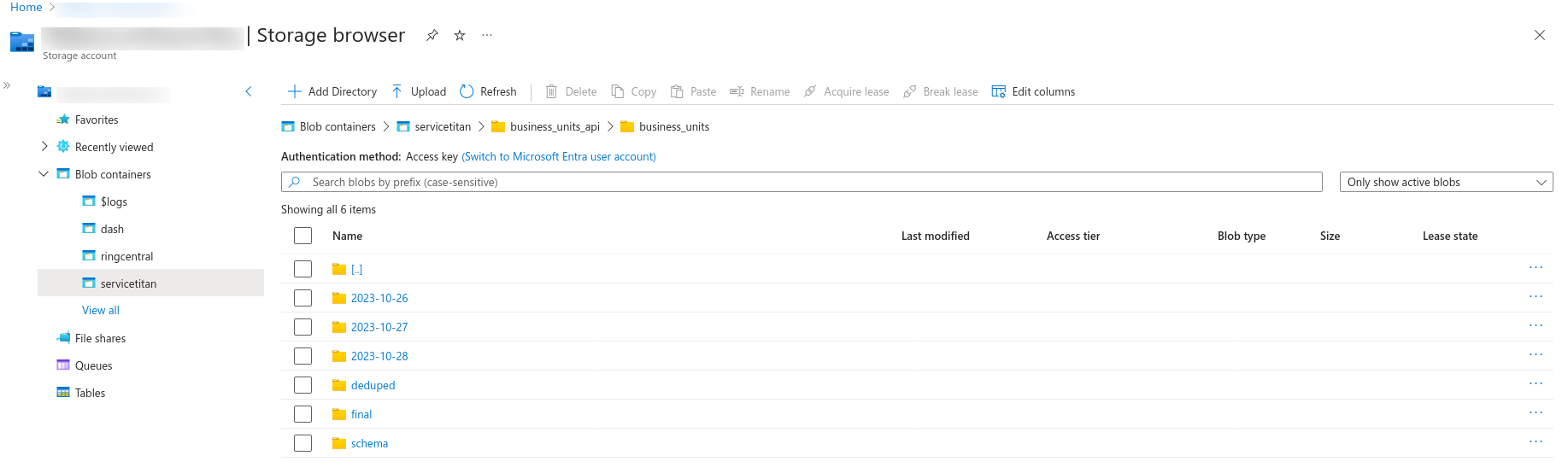

Sample output in Azure Blob.

Save the form and exit the page.

With the above steps the pipeline will run on schedule or you can navigate to the Integrations -> "Your Integration" -> Select Dataset and click on Run now to run your pipeline.

The pipeline execution status will be visible on the dashboard and the failure notifications will be sent to the emails specified in the "Notifications" tab under settings.

Pipeline Execution

The pipeline will execute in your environment. This can be audited by checking the Azure Container Instances logs.